For people who are visually impaired, even the simplest daily tasks and activities aren’t always easy to complete.

If a blind person could give a robot a voice command to perform a simple task, such as “Please open the fridge, pick up the water bottle and bring it to me,” it could change his or her life.

Cal State Fullerton technology researchers Kiran George and Anand Panangadan and their students are working to do just that, using assistive technology to improve the quality of life for blind veterans.

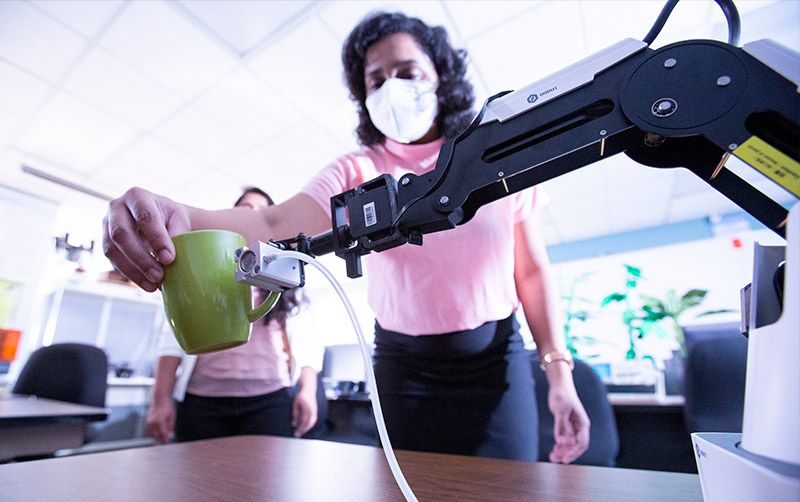

The researchers are developing a low-cost, voice-controlled robotic arm to assist visually impaired veterans in performing routine daily activities, such as opening a door and retrieving items from the refrigerator.

The project is funded by a nearly $200,000 grant from the U.S. Department of Veterans Affairs. George is directing the hardware aspects of the project, including building the robotic arm, interfacing various sensors and cameras with the arm and training the arm to perform tasks.

“The robotic arm utilizes imitation learning, whereby it can be trained to do tasks by manually guiding it the first time, and it will remember it the next time,” said George, professor of computer engineering who is directing the project. “This project has the potential to reduce the stress on caretakers and family members of blind veterans on a daily basis.”

Panangadan, assistant professor of computer science who is co-directing the project, is handling the natural language processing side of the work, such as interpreting the user’s spoken commands to the robotic arm.

By the end of the 14-month project, which began this fall semester, the faculty-student team plans to have a functional prototype of a “Robotic Aid System,” said George, the university’s 2020 Outstanding Professor, who directs the Bio-Electric Signal Lab in the College of Engineering and Computer Science.

Hands-On Student Learning

Graduate students Rashika Natharani, a computer science major, and Devaj Parikh and Ruchi Ramesh Bagwe, both computer engineering majors, are working on the project. The undergraduate researchers are Kayla Lee, Henry Lin and James Samawi, all computer engineering students.

Bagwe, who has eight years of data-driven industry experience and has published several research papers on machine learning and deep learning, is building a language-based communication system that translates unstructured spoken language into actions for the robot.

“The robotic field is rapidly changing and robots have become a routine part of our daily lives,” said Bagwe, who plans to pursue a doctorate in natural language processing for machines. “This field involves a combination of creativity, along with engineering and technology.”

Natharani is designing the robot’s speech recognition and developing a system that responds intuitively to the user’s voice commands.

“The idea of making technology more accessible to those in need and to help them with routine tasks makes this project intriguing for me,” said Natharani, who plans to pursue a career as a machine learning engineer. “This project is the intersection of two of my favorite fields: artificial intelligence and robotics. I wanted to combine my knowledge of these fields to come up with a technologically powerful and multidisciplinary product.”

Students involved in the project can take the theoretical concepts they learn in their coursework and apply them to real-world problems.

“The experience provides them with new skill sets, such as the ability to effectively brainstorm solutions, research problems and implement them, and develop interpersonal skills when working with fellow researchers,” George said. “From my experience, employers greatly value such skills in their employees. Most importantly, their hands-on experience leads to an engineering workforce that is more enthusiastic, purposeful and productive.”